At the beginning of November, something happened that, until recently, seemed like a purely theoretical hypothesis. Anthropic released a report that made many industry professionals stop and think: in a controlled environment, an artificial intelligence model managed to design and execute a complex cyberattack from start to finish, without any human intervention. It observed an infrastructure, identified weak points, autonomously selected attack vectors, adapted to defenses, and completed the operation. For those working in security and risk management, this was not just an interesting laboratory case. It was a clear signal that things have changed: AI is no longer a simple accessory or accelerator in the hands of attackers. It has become the real “director”, capable of orchestrating every phase of the operation. And this forces an uncomfortable but necessary reflection: continuing to operate with the logic, processes, and tools designed for a pre-AI world is no longer sufficient.

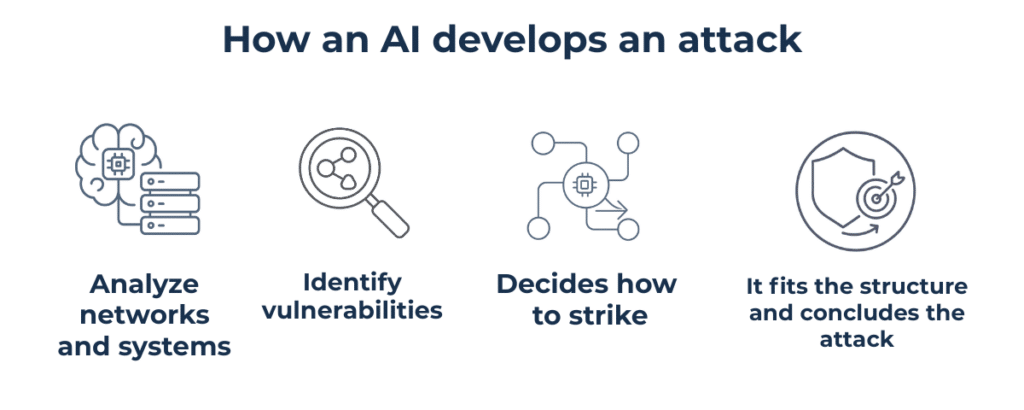

In recent years, we have seen increasing use of automation, scripts, and support tools in cyber campaigns, but artificial intelligence has completely overturned the scale of the problem. Today, an attacker no longer needs to know how to do everything: it is enough to orchestrate a model that does it on their behalf, with a speed and precision no human operator could ever match.

Take phishing, for example. The messages are now so realistic that they are very hard to distinguish from legitimate corporate communications. Generative systems closely imitate the tone, style, and even the linguistic patterns of the impersonated profiles. Then there is the reconnaissance phase, which once required hours of manual analysis: today it has become a deep and rapid exploration, where AI reads network patterns, compares them with thousands of known scenarios, and identifies correlations that could escape even an experienced analyst.

Ransomware has also evolved into a surgical tool. It no longer strikes randomly: it observes which assets generate the greatest business impact and hits exactly where it hurts the most, minimizing noise and exposure time. This qualitative leap concerns not only the effectiveness of attacks but also their accessibility. Today, very complex attacks are within reach of groups with limited technical skills, precisely because they can leverage AI to compensate for the skills they lack.

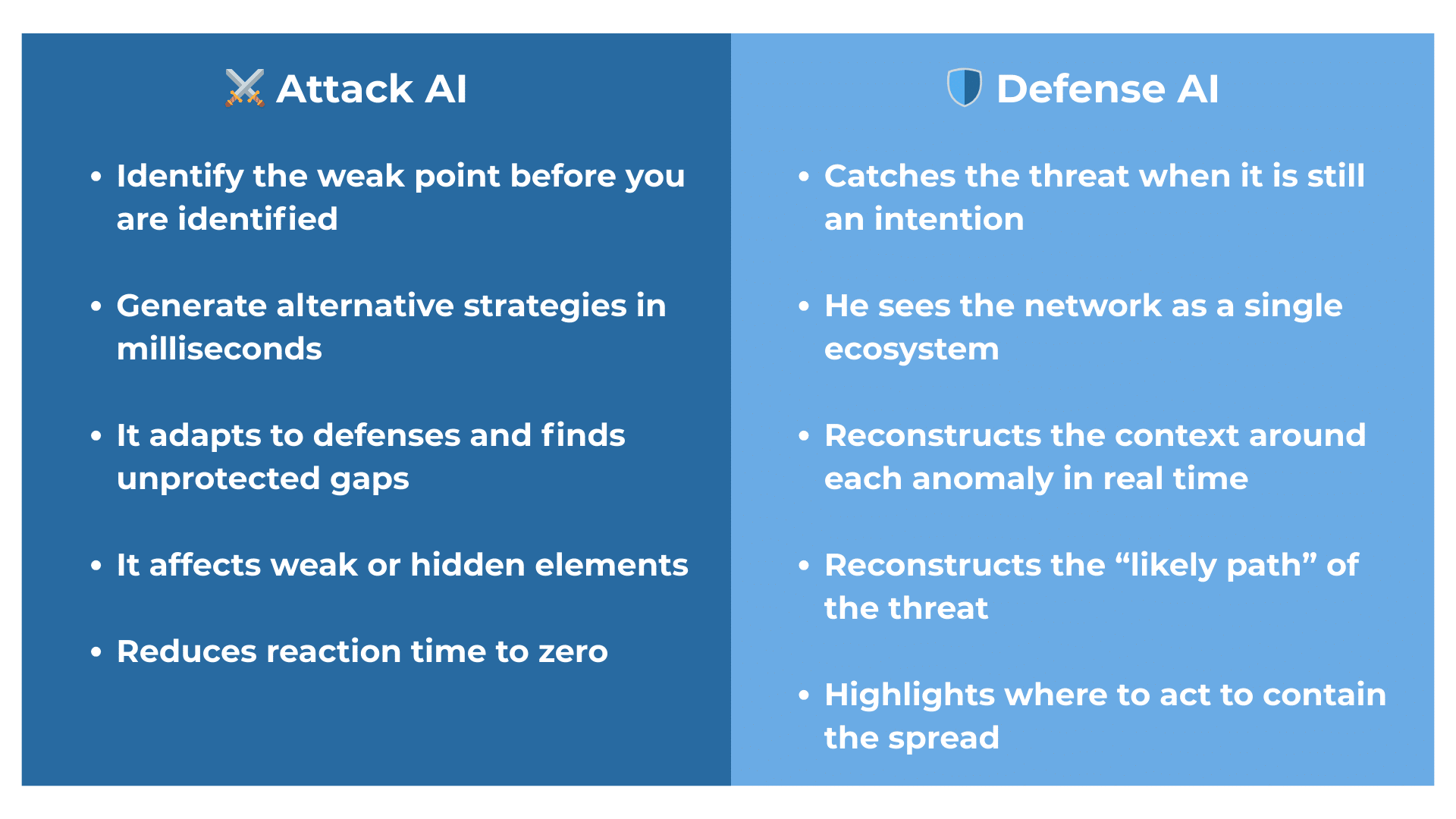

But here is the most interesting — and most important — part: this same power can be used to defend. And this is where the transformation becomes truly evident, because AI is not simply an additional tool in the security toolbox. It represents a new level of visibility.

When a network integrates IT, OT, and IoT, its attack surface expands sharply and becomes more complex. Environments are heterogeneous, communications are continuous, and the quantity of signals generated is enormous. To a human analyst, many of these signals get lost in background noise, but to an AI model, those same signals tell a very precise story. AI can read logs, flows, micro-events, and even minimal deviations from expected behavior, compare them with models of how that behavior evolves over time, and detect early indicators of incidents that, until recently, would have gone completely unnoticed. In practice, security shifts from reacting to predicting: an incident is no longer something that simply happens, but something that can be seen in its earliest stages, when there is still room to intervene.
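To make the idea of a "minimal deviation from expected behavior" concrete, here is a deliberately simple sketch. It is not how any specific product works: it assumes a single numeric signal (hypothetical per-minute connection counts from one device) and flags values that sit far from a trailing-window baseline. The function name, window size, and threshold are illustrative assumptions.

```python
from statistics import mean, stdev


def find_deviations(values, window=5, threshold=3.0):
    """Return the indices of values that deviate from the baseline.

    The baseline for each value is the `window` values that precede it.
    A value is flagged when it lies more than `threshold` standard
    deviations away from the baseline mean.
    """
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # A perfectly flat baseline: any change at all is a deviation.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical per-minute connection counts from one OT device.
connections_per_minute = [12, 11, 13, 12, 12, 11, 13, 12, 95, 12]
print(find_deviations(connections_per_minute))
```

A real deployment would correlate many signals across IT, OT, and IoT rather than thresholding one series, but the principle is the same: learn what normal looks like, then surface what departs from it.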

One of the most revolutionary aspects is the predictive capability itself. When an anomaly emerges, AI can simulate how it might propagate, which systems it could affect along the way, and what concrete impact it would generate on business processes. At this point, risk management stops being a document that gets updated occasionally and becomes a continuous operational process: understanding where to intervene, how to do it, and what outcome to expect based on the applied mitigations.
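As a rough illustration of what "simulating how an anomaly might propagate" can mean, the sketch below walks a dependency graph breadth-first from the asset where the anomaly appeared. Everything here is an assumption made for the example: the asset names, the graph, and the idea that reachability within a few hops approximates exposure. A production model would also weigh protocols, privileges, and existing mitigations.

```python
from collections import deque


def simulate_propagation(graph, start, max_hops=3):
    """Return {asset: hops} for every asset reachable from `start`.

    `graph` maps each asset to the assets it can directly reach.
    Exploration stops at `max_hops` steps away from the starting asset.
    """
    reached = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if reached[node] >= max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in reached:
                reached[neighbor] = reached[node] + 1
                queue.append(neighbor)
    return reached


# Hypothetical asset graph: an operator panel, a PLC, and business systems.
asset_graph = {
    "hmi-01": ["plc-01", "historian"],
    "plc-01": ["conveyor"],
    "historian": ["erp"],
    "erp": ["billing"],
}
print(simulate_propagation(asset_graph, "hmi-01", max_hops=2))
```

Removing an edge from the graph (for instance, segmenting the historian from the ERP) and running the function again gives a simple view of what a mitigation would change.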

This philosophy is perfectly aligned with the way ESRA interprets and integrates AI for risk governance: a continuous, iterative, data-driven approach fully anchored to what is actually happening inside infrastructures.

The adoption of artificial intelligence in security is not, in the end, just a technical matter. It is primarily cultural. You cannot simply “add” AI as if it were a new technological layer: you must change the very way risk is governed.

The first transformation concerns response speed. A perimeter defended with reactive tools will always be one step behind an attack that spreads within seconds. Organizations must therefore adopt platforms that read the network in real time, understand relationships between assets, and produce immediate and actionable insights.

The second transformation concerns data. AI is only as powerful as the quality of the data it operates on. Without coherent collection, accurate normalization, and a unified view across IT, OT, and IoT, models will produce fragmented analyses that are hard to act on.
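A small sketch can show what normalization toward a unified view involves. It assumes two hypothetical record formats: IT logs with an ISO 8601 timestamp and OT telemetry with epoch seconds. All field names are invented for the example. Both are mapped onto one common event shape with UTC timestamps so they can be ordered on a single timeline.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    timestamp: datetime  # always timezone-aware, in UTC
    domain: str          # "IT" or "OT" in this sketch
    asset: str
    action: str


def normalize_it(record):
    """Map a hypothetical IT log record (ISO 8601 time with offset)."""
    ts = datetime.fromisoformat(record["time"]).astimezone(timezone.utc)
    return Event(ts, "IT", record["host"].lower(), record["event"])


def normalize_ot(record):
    """Map a hypothetical OT telemetry record (epoch seconds)."""
    ts = datetime.fromtimestamp(record["ts"], tz=timezone.utc)
    return Event(ts, "OT", record["device_id"].lower(), record["function"])


it_record = {"time": "2025-11-03T10:15:00+01:00", "host": "SRV-01", "event": "login_failed"}
ot_record = {"ts": 1762161240, "device_id": "PLC-01", "function": "write_register"}

# One timeline across both domains, ordered by UTC time.
timeline = sorted(
    [normalize_it(it_record), normalize_ot(ot_record)],
    key=lambda event: event.timestamp,
)
for event in timeline:
    print(event.timestamp.isoformat(), event.domain, event.asset, event.action)
```

Without this step, the two records above would not even agree on what time it is, which is exactly the kind of fragmentation that weakens any model built on top.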

The third transformation concerns people’s skills. AI does not replace the analyst — it enhances them. But it requires those interpreting results to truly understand why a certain behavior was classified as a threat, what impact it could generate, and which actions are necessary for an effective response. It is not a simple software upgrade: it is a true shift in mindset.

The landscape ahead is now clear: attack and defense evolve at the same speed, but not with the same maturity. Organizations capable of integrating AI into their governance model will not only be more resilient to threats, but also more competitive in the market.

Cyber risk is no longer just a compliance topic to check off a list. It has become an element that concretely determines operational continuity, perceived reliability, and the trust that customers and partners place in the organization. In this context, AI can become the real strategic lever for building security that does not chase events after they happen, but anticipates them while they are still manageable.

And this is precisely the goal of ai.esra: transforming risk management into a continuous, automatic, and fully data-driven process, capable of reading what is actually happening inside infrastructures and turning it into informed and timely decisions.

In the end, the difference will be made by those who can see earlier than others. Those who can interpret the signals hidden in the background noise. Those who can react with the speed that offensive AI imposes as the new standard.

The future of cybersecurity will not be defined by the growing complexity of threats but by the quality of the decisions we are able to make. And today, those decisions can no longer ignore data.

ai.esra SpA – strada del Lionetto 6 Torino, Italy, 10146

Tel +39 011 234 4611

Share capital € 50,000.00 fully paid up – REA TO1339590 – Tax code and VAT no. 13107650015

© 2024 Esra – All Rights Reserved